Building Tools for Agents: Backtesting

Do you even beat LIBOR, bro?

Imagine a while loop that spins up an AI agent with this system prompt: "Review LessonsLearned.md and build a stock trading ETL pipeline and model setup that would be profitable. If you fail, note down your findings in LessonsLearned.md." Let's call it the Vibe Trader. We're not just asking a reasoning model to run a script; we're asking it to reason, build, evaluate, run an after-action review (AAR), and peacefully fire itself until alpha is reached (and even then, it's still fired). This is FLOPS-driven peak capitalism: exactly what linear algebra was invented for.

But how do we build the playground for such an agent? Giving it carte blanche to write code from scratch introduces a lot of complexity. Trusting an LLM to check its own ETL for future (look-ahead) leakage is foolhardy, but let's assume that has been controlled for. A more robust approach is to use tool calling, so that the agent's reasoning is channeled into the most critical AI task: generate and test.
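A minimal sketch of the outer Vibe Trader loop, assuming each agent run can be wrapped as a Python callable. The names `vibe_trader_loop` and `Attempt`, and the `## Attempt` log format, are hypothetical, and the agent itself is stubbed out. What it shows is the generate-and-test shape: read the lessons, try a configuration, and on failure append an AAR before the next attempt starts.

```python
from pathlib import Path
from typing import Callable, Optional, Tuple

# Hypothetical: one "attempt" is a full agent run. It receives the current
# contents of LessonsLearned.md and returns (beat_benchmark, findings).
Attempt = Callable[[str], Tuple[bool, str]]


def vibe_trader_loop(
    attempt: Attempt, lessons_path: Path, max_attempts: int = 5
) -> Optional[int]:
    """Generate-and-test loop: each iteration runs a fresh attempt that reads
    LessonsLearned.md and, on failure, appends its findings (the AAR).

    Returns the 1-based number of the successful attempt, or None when the
    attempt budget runs out.
    """
    lessons_path.touch(exist_ok=True)  # start from an empty lessons file if missing
    for i in range(1, max_attempts + 1):
        lessons = lessons_path.read_text()
        success, findings = attempt(lessons)
        if success:
            return i  # alpha reached: stop spinning up new agents
        # Failure: record the after-action review for the next attempt to read.
        with lessons_path.open("a") as f:
            f.write(f"## Attempt {i}\n{findings}\n\n")
    return None  # runway exhausted
```

In practice `attempt` would wrap the LLM agent and its tool calls; for a dry run any stub works, such as a lambda that succeeds once it sees two prior `## Attempt` entries in the lessons text.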

The Tool: run_backtest Runnable

To do its job, our agent needs a tool. We provide it a single endpoint: run_backtest(etl_v, model_v, trade_strat_v, X_test=X_test), where the first three arguments select the versions of the ETL pipeline, model, and trading strategy to test. Registering it as a Runnable in the agent's toolkit is simple with LCEL (LangChain Expression Language). When the agent calls this function with a chosen configuration, the system runs a rigorous backtest on a held-out test partition of our historical data. This is plain, deterministic code, ruthlessly devoid of any hallucination. The function then returns a structured JSON object containing the results.

The agent gets this output:

```json
{
  "all_time_sharpe": 1.2,
  "all_time_returns_pct": 35.5,
  "rolling_sharpe_data": [...],
  "rolling_win_rate_data": [...],
  "max_drawdown_pct": -15.2,
  "recent_trades": [
    { "ticker": "AAPL", "pnl": 150.2, "signal_strength": 0.88 },
    { "ticker": "GOOG", "pnl": -50.1, "signal_strength": 0.51 },
    ...
  ]
}
```
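How run_backtest computes this payload is not specified here. A minimal sketch of the reporting step, assuming daily strategy returns and a trade log are already available from the held-out backtest, could look like the following. `build_backtest_report`, the 63-day window, and the 252-day annualization are assumptions, and the rolling win rate is approximated as the share of positive-return days per window rather than per trade.

```python
import math
from statistics import mean, stdev
from typing import Callable, Dict, List

TRADING_DAYS = 252  # assumption: daily returns, annualized with sqrt(252)


def sharpe(returns: List[float], rf_daily: float = 0.0) -> float:
    """Annualized Sharpe ratio of daily returns in excess of a daily risk-free rate."""
    if len(returns) < 2:
        return 0.0
    excess = [r - rf_daily for r in returns]
    sd = stdev(excess)
    return 0.0 if sd == 0 else mean(excess) / sd * math.sqrt(TRADING_DAYS)


def max_drawdown_pct(returns: List[float]) -> float:
    """Largest peak-to-trough decline of the compounded equity curve, in percent (<= 0)."""
    equity, peak, worst = 1.0, 1.0, 0.0
    for r in returns:
        equity *= 1 + r
        peak = max(peak, equity)
        worst = min(worst, equity / peak - 1)
    return worst * 100


def rolling(returns: List[float], window: int, fn: Callable[[List[float]], float]) -> List[float]:
    """Apply fn to every trailing window of `window` observations."""
    return [fn(returns[i - window:i]) for i in range(window, len(returns) + 1)]


def build_backtest_report(
    daily_returns: List[float],
    trades: List[Dict],
    window: int = 63,  # roughly one quarter of trading days (assumption)
    rf_daily: float = 0.0,
    n_recent: int = 20,
) -> Dict:
    """Assemble the JSON-serializable payload handed back to the agent."""
    total_return = math.prod(1 + r for r in daily_returns) - 1
    return {
        "all_time_sharpe": round(sharpe(daily_returns, rf_daily), 2),
        "all_time_returns_pct": round(total_return * 100, 1),
        "rolling_sharpe_data": rolling(daily_returns, window, lambda w: sharpe(w, rf_daily)),
        # Win rate approximated as the share of positive-return days per window.
        "rolling_win_rate_data": rolling(daily_returns, window, lambda w: sum(r > 0 for r in w) / len(w)),
        "max_drawdown_pct": round(max_drawdown_pct(daily_returns), 1),
        "recent_trades": trades[-n_recent:],
    }
```

Passing the result through `json.dumps` gives the structured JSON the agent sees. Exposing run_backtest itself to the agent could then be done with, for example, LangChain's `RunnableLambda` or `@tool` wrapper, which this sketch leaves out.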

The Agent's Decision Framework: Beyond a Single Number

Now for the agent's core task: interpreting these results. We don't want our agent to get too excited over all-time returns, given possible (read: recent) black swan volatility. A sophisticated agent knows a single headline number is not enough. It needs to evaluate the quality and robustness of the performance using a multi-faceted approach.

1. Is it profitable at all? (The Baseline Check)

The agent first looks at all_time_sharpe and all_time_returns_pct. If these are negative or near zero, the strategy is likely a non-starter. But this is just a coarse filter.
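A minimal sketch of the baseline check as a coarse filter, assuming the payload shape shown earlier. The thresholds are illustrative, and returning the findings alongside the pass/fail flag (a hypothetical convention) lets a failed attempt write them straight into LessonsLearned.md.

```python
from typing import Dict, List, Tuple


def baseline_check(
    report: Dict, min_sharpe: float = 0.5, benchmark_return_pct: float = 0.0
) -> Tuple[bool, List[str]]:
    """Coarse filter on the all-time numbers.

    min_sharpe and benchmark_return_pct are illustrative thresholds; the
    benchmark could be the cumulative LIBOR (or market) return over the
    same test period.
    """
    findings = []
    if report["all_time_sharpe"] < min_sharpe:
        findings.append(f"all-time Sharpe {report['all_time_sharpe']} is below {min_sharpe}")
    if report["all_time_returns_pct"] <= benchmark_return_pct:
        findings.append(
            f"all-time return {report['all_time_returns_pct']}% does not beat "
            f"the benchmark's {benchmark_return_pct}%"
        )
    return not findings, findings
```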

2. Is it consistently profitable? (The Robustness Check)

This is where rolling metrics are critical. A strategy might have a great overall Sharpe ratio simply because it got lucky on one or two massive trades, while bleeding money the rest of the time. This strategy is fragile and unlikely to generalize.

Lacking a real system of investment principles, the quant-driven agent must at least evaluate the Sharpe ratio over rolling windows, not just in aggregate.

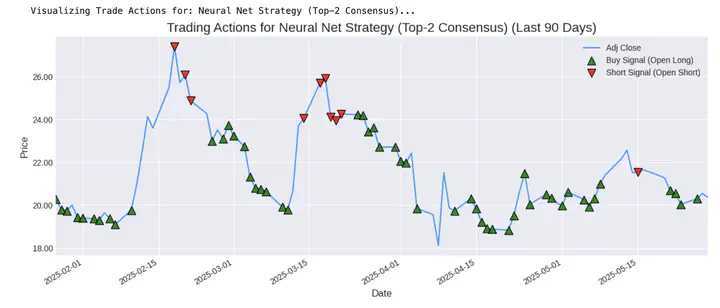

This strategy looks good on paper, but its rolling Sharpe ratio and rolling win rate show that there are some periods of losses. What underlies these losses? How can this finding be recorded in LessonsLearned.md?
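A minimal sketch of the robustness check, assuming the payload shape shown earlier: flag the strategy if too many rolling windows have a negative Sharpe, or if a handful of trades carry most of the PnL (the "got lucky on one or two massive trades" failure mode). Both thresholds and `top_k` are hypothetical knobs, and concentration is measured on `recent_trades` only because that is what the payload carries; a full trade log would be better.

```python
from typing import Dict, List, Tuple


def robustness_check(
    report: Dict,
    max_negative_window_frac: float = 0.25,
    max_top_trade_share: float = 0.5,
    top_k: int = 2,
) -> Tuple[bool, List[str]]:
    """Flag strategies whose edge is inconsistent over time or rides on a few trades."""
    findings = []
    rolling_sharpe = report["rolling_sharpe_data"]
    if rolling_sharpe:
        neg_frac = sum(s < 0 for s in rolling_sharpe) / len(rolling_sharpe)
        if neg_frac > max_negative_window_frac:
            findings.append(f"{neg_frac:.0%} of rolling windows have negative Sharpe")
    else:
        findings.append("no rolling Sharpe data to judge consistency")

    # Concentration: share of total PnL contributed by the top_k best trades.
    pnls = [t["pnl"] for t in report["recent_trades"]]
    total = sum(pnls)
    if total > 0:
        top_share = sum(sorted(pnls, reverse=True)[:top_k]) / total
        if top_share > max_top_trade_share:
            findings.append(f"top {top_k} trades account for {top_share:.0%} of PnL")
    return not findings, findings
```

The findings strings double as candidate LessonsLearned.md entries, which is one possible answer to the question of what underlies the losing periods.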

3. Is it still working now? (The Recency Check)

Markets evolve. A strategy that worked wonders two years ago might have lost all its alpha today. Lending/payback cycles may have shortened compared to two decades ago, and market downturn cycles may have shortened as put-option winners reinvest their gains. The agent must inspect the recent_trades and the tail end of the rolling performance charts. Is there a sharp downturn? Does it look like the "alpha" has decayed? This check prevents deploying a strategy that is already failing.
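A minimal sketch of the recency check, assuming the payload shape shown earlier: compare the median rolling Sharpe of the last few windows against the earlier ones, and check whether the recent trades are net losers. `tail` and `decay_ratio` are illustrative values, not recommendations.

```python
from statistics import median
from typing import Dict, List, Tuple


def recency_check(
    report: Dict, tail: int = 5, decay_ratio: float = 0.5
) -> Tuple[bool, List[str]]:
    """Compare the last `tail` rolling-Sharpe windows against the earlier ones."""
    rolling_sharpe = report["rolling_sharpe_data"]
    if len(rolling_sharpe) < 2 * tail:
        return False, ["not enough rolling windows to judge recent performance"]
    findings = []
    earlier = median(rolling_sharpe[:-tail])
    recent = median(rolling_sharpe[-tail:])
    if recent < 0:
        findings.append(f"recent rolling Sharpe is negative ({recent:.2f})")
    elif earlier > 0 and recent < decay_ratio * earlier:
        findings.append(f"alpha may be decaying: rolling Sharpe fell from {earlier:.2f} to {recent:.2f}")
    # Net PnL of the most recent trades reported by the backtest.
    recent_pnl = sum(t["pnl"] for t in report["recent_trades"])
    if recent_pnl < 0:
        findings.append(f"recent trades lost {recent_pnl:.2f} in total")
    return not findings, findings
```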

What prompt engineering would drive the agent to derive useful insights for LessonsLearned.md?

The Last LangGraph Node: Exit Strategy

After weighing all this evidence, the agent makes a final decision by calling one of three conclusion functions:

GiveUp(reason="..."): The agent has hit its max_attempt count. The runway has dried up.

IterateAndPivot(suggestion="..."): The agent appends to LessonsLearned.md whether introducing a new data source in the ETL helped it beat LIBOR or the market. For example: "Strategy branch 1 shows a stable edge and beat market returns until the last quarter. The underlying signals may be decaying too slowly. Suggestion: Re-try branch 1 with the same ETL but decrease the lookback window."

AnnounceMeNextRenTech(confidence_score=0.95): This is the home run, but how long will this alpha last? Will you allocate 5% of your portfolio to it while the alpha is still fresh, then discard it and call it a day? Or do you keep spinning up agents to iteratively derive "fresh alpha" ad infinitum?
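One way to sketch this final node in plain Python, with the LangGraph wiring omitted: the three conclusions become dataclasses, and a deterministic rule stands in for the agent's judgment (useful as a guardrail around it). The `checks` format, which maps a check name to `(passed, findings)`, and the confidence heuristic (share of rolling windows with positive Sharpe) are assumptions, not part of the original design.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple, Union


@dataclass
class GiveUp:
    reason: str


@dataclass
class IterateAndPivot:
    suggestion: str


@dataclass
class AnnounceMeNextRenTech:
    confidence_score: float


Decision = Union[GiveUp, IterateAndPivot, AnnounceMeNextRenTech]


def exit_node(
    checks: Dict[str, Tuple[bool, List[str]]],
    rolling_sharpe: List[float],
    attempt: int,
    max_attempts: int,
) -> Decision:
    """Route to one of the three conclusions based on the check results."""
    failed = {name: msgs for name, (ok, msgs) in checks.items() if not ok}
    if not failed:
        # Hypothetical confidence heuristic: share of rolling windows with positive Sharpe.
        positive = sum(s > 0 for s in rolling_sharpe)
        return AnnounceMeNextRenTech(confidence_score=positive / max(len(rolling_sharpe), 1))
    summary = "; ".join(f"{name}: {', '.join(msgs) or 'failed'}" for name, msgs in failed.items())
    if attempt >= max_attempts:
        return GiveUp(reason=summary)  # attempt budget exhausted
    return IterateAndPivot(suggestion="Address before next attempt: " + summary)
```

In a LangGraph setup, the returned type could drive a conditional edge: IterateAndPivot loops back to the build step after its suggestion is written to LessonsLearned.md, while the other two end the run.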

My POV is that LLMs are stochastic parrots. They perform much better than RNNs, LSTMs, apes, and others in specific domains. So whatever hidden semantic pattern is present in the combined universe of tokens that humans have transcribed, they can and will leverage it. Words, pixels, wavelengths... many things can be encoded. However, not everything can be one-hot encoded into UTF. Not to mention, a purely quant approach has its own biases.

There is no free lunch. But there may be just enough crackers.